Anyone who has worked with Kinect will tell you that the data you get out of the sensor is somewhat noisy. This is particularly problematic if you’re doing avateering: you can’t just apply the raw joint data directly to a model - you’ll need to do some filtering first, such as Holt double exponential smoothing.
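For readers who haven’t met it, here is a minimal sketch of double exponential smoothing applied to a single joint position. It is not the filter we shipped: the class name `HoltSmoother` and the `alpha` and `beta` defaults are made up for illustration, and a real avateering filter would typically add things like jitter thresholds and prediction on top.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class HoltSmoother:
    """Double exponential smoothing for one joint (illustrative sketch)."""

    def __init__(self, alpha: float = 0.5, beta: float = 0.5) -> None:
        # alpha weights the new sample; beta weights the new trend estimate.
        # Both defaults are arbitrary assumptions, not tuned values.
        self.alpha = alpha
        self.beta = beta
        self.level: Optional[Vec3] = None
        self.trend: Vec3 = (0.0, 0.0, 0.0)

    def update(self, raw: Vec3) -> Vec3:
        # The first sample has nothing to smooth against, so pass it through.
        if self.level is None:
            self.level = raw
            return raw

        prev_level, prev_trend = self.level, self.trend
        # Blend the raw sample with where the previous estimate was heading.
        level = tuple(
            self.alpha * x + (1.0 - self.alpha) * (l + t)
            for x, l, t in zip(raw, prev_level, prev_trend)
        )
        # Update the per-frame trend from the change in the smoothed level.
        trend = tuple(
            self.beta * (nl - l) + (1.0 - self.beta) * t
            for nl, l, t in zip(level, prev_level, prev_trend)
        )
        self.level, self.trend = level, trend
        return level
```

You would keep one smoother per joint and feed it the raw position every frame. Lower `alpha` values give a steadier but laggier avatar.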

We were nearing the deadline for an interactive installation when we got a very odd report: the client said that while everything was going perfectly in 99% of cases, when a particular QA user tried the application the avatar’s legs suddenly started kicking around wildly.

This made no sense to us - the algorithms we were using were in no way person-specific. I asked them to send us some video of how the render looked and, sure enough, the legs were flailing around like an epileptic octopus.

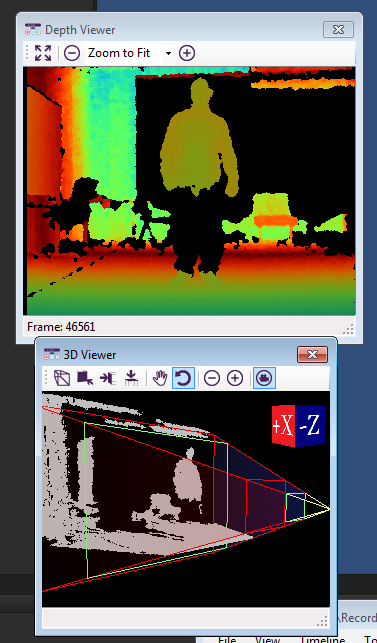

Intrigued, we moved to the next step in debugging and checked a recording of the raw Kinect data. This immediately showed us what the problem was: Kinect was seeing the user as a floating torso.

What gives?

If you’re not familiar with Kinect recordings, the color of a pixel represents how close the Kinect sees something at that point in space. A completely black pixel means that there is nothing there in Kinect’s range - for instance, there might be a wall that’s too far to see.

To understand what might be causing an issue like this, you have to remember how Kinect sees a scene. It effectively casts a stream of light out and waits to see how long it takes to get back - the longer it takes, the further away an item is.
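As a back-of-the-envelope illustration of that idea, the distance is half the round trip multiplied by the speed of light. This is only the simplified model described above, not how the sensor computes depth internally, and the function name is made up for the example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(seconds: float) -> float:
    """Distance in meters to a surface, given the light's round-trip time."""
    # The light travels out and back, so only half the trip is the distance.
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2.0
```

The numbers involved are tiny: something two meters away corresponds to a round trip of roughly 13 nanoseconds, so it doesn’t take much scattered light to throw a reading off.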

So why does Kinect think our QA user has no legs?

Turns out the answer was trivial: he liked wearing baggy leather pants.

When Kinect’s stream of light hits his leather pants, the light scatters, and Kinect’s perception of that area gets smudged. It then decides that it has no idea what’s there or how far away it is.

It does see a human torso, head and arms, so it’ll inform the application that there is a body. Since it can’t see the legs, though, their position will be inferred. That’s another word for guessed. And apparently it wasn’t doing a very good job of guessing.

The problem turned out to be easy to solve: we added a heuristic where, if more than a certain number of major joints in a limb were being inferred, we assumed that Kinect did not have a clear view of the limb. Depending on how many joints were affected, we either increased the smoothing or just locked the limb in a neutral position altogether.
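The heuristic can be sketched roughly like this. It is an illustration rather than our production code: the enums below just mirror the tracked / inferred / not tracked states that Kinect reports per joint, and the function name and both thresholds are hypothetical values you would tune for your own rig.

```python
from enum import Enum
from typing import Iterable


class TrackingState(Enum):
    NOT_TRACKED = 0
    INFERRED = 1
    TRACKED = 2


class LimbAction(Enum):
    NORMAL = "normal"                    # trust the data, default smoothing
    EXTRA_SMOOTHING = "extra_smoothing"  # data is shaky, smooth harder
    LOCK_NEUTRAL = "lock_neutral"        # data is unusable, hold a neutral pose


def decide_limb_action(
    joint_states: Iterable[TrackingState],
    smooth_above: int = 1,  # hypothetical threshold
    lock_above: int = 2,    # hypothetical threshold
) -> LimbAction:
    """Pick how to treat a limb from how many of its joints are inferred."""
    inferred = sum(1 for s in joint_states if s is TrackingState.INFERRED)
    if inferred > lock_above:
        return LimbAction.LOCK_NEUTRAL
    if inferred > smooth_above:
        return LimbAction.EXTRA_SMOOTHING
    return LimbAction.NORMAL
```

For a leg you might pass in the states of the hip, knee, ankle and foot each frame. With the defaults above, two inferred joints trigger heavier smoothing and three or more lock the leg.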

Next time you are running a Kinect-driven system and find you have issues with a particular user, check what they’re wearing!